Decoding Brain Signals with Machine Learning and Neuroscience

Become Professor X and unlock the secrets of our mind

![Photo on Unsplash](/assets/img/posts/control-exoskeleton-with-your-brain-01.jpg)

In the X-Men comics, Professor Charles Xavier is one of the most powerful mutants. He possesses the mental power to read minds and move things.

Want to become Professor X? Read on!

Our brain is a powerful organ: it is the command centre of the human nervous system. It works like a big computer that sends, receives and processes information.

What if we could intercept these signals? With a device that reads the mind, you could unlock the power of TELEPATHY!

A brain-computer interface decodes our intentions from our brain signals. This means you don’t even have to move a muscle!

Imagine that I want to send a text message. I simply stare at the letters I want to type on the keyboard, and my phone starts typing the words and sentences I am thinking about!

This might seem like science fiction, and you might think humanity is still years away from such a feat. In fact, scientists have been developing and refining this technology for decades.

In this article, I will share an exciting piece of research in which users control an exoskeleton by staring at blinking lights!

The occipital lobe

The human brain is an amazing three-pound organ that controls all our body functions. It processes all our thoughts and is the neurobiological basis of human intelligence, creativity, emotion, and memory. The brain is divided into several parts, and each part has a primary function.

![](/assets/img/posts/control-exoskeleton-with-your-brain-02.jpg)

For this experiment, our focus is on the occipital lobe. It is our visual processing centre: it processes visual input and enables our brain to recognise what we are looking at.

The type of data we can collect from our brain

The purpose of a brain-computer interface (BCI) is to provide a direct communication pathway between the brain and an external device. This lets its users interact with computers by means of brain activity.

A BCI isn’t a mind-reading device like Cerebro. Instead, it detects changes in the electrical activity of the brain. A human brain contains about 86 billion neurons, each connected to many other neurons. Every time we think or move a muscle, these neurons are at work, firing electrical signals. A BCI recognises patterns in this activity.

![](/assets/img/posts/control-exoskeleton-with-your-brain-03.jpg)

Electroencephalography (EEG) is a popular technique for recording signals from our brain. It is non-invasive, so we don’t need to cut open the skull to collect brain signals.

EEG records the electrical activity of the brain using a series of electrodes placed on the scalp. The person wears an EEG cap with electrodes positioned at specific points, and these electrodes pick up the electrical signals produced by the brain.

For this experiment, we want to record the brain signals related to what our eyes are looking at. Electrodes placed over the occipital lobe pick up signals related to what we are seeing, in this case flickering lights. This type of EEG signal is called a steady-state visual evoked potential.

Steady-state visual evoked potential

Steady-state visual evoked potentials (SSVEPs) are signals generated when we look at something flickering, typically at frequencies between 1 and 100 Hz. In this experiment, the flickering sources are blinking LED lights, which are called “stimuli”.

Consider a brain-computer interface system where the goal is to decode the user’s input for one of two possible choices, “left” or “right”. There are two stimuli, one for selecting the “left” option and another for the “right”.

The two stimuli flicker at different frequencies: 11 Hz represents “turn left”, while 15 Hz represents “turn right”. Users pick an option by focusing on the corresponding stimulus; for example, they focus on the “left” stimulus to select the “left” option.

When the user focuses on one of the stimuli, the frequency of that stimulus can be picked up over the occipital lobe. We can determine which light the user is focusing on by extracting the stimulus frequency from the EEG signals. That is how a BCI system can translate SSVEP brain signals into instructions for external devices.
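To make this concrete, here is a minimal sketch of frequency-based detection on a single synthetic EEG channel. It assumes a 256 Hz sampling rate, a 4-second window and a simulated 11 Hz response buried in Gaussian noise; `dominant_stimulus` is a hypothetical helper, not the method used in the study discussed below. It simply picks whichever candidate stimulus frequency carries the most power in the FFT spectrum.

```python
import numpy as np


def dominant_stimulus(eeg, fs, candidates):
    """Return the candidate frequency (Hz) with the most spectral power in a 1-D EEG trace."""
    power = np.abs(np.fft.rfft(eeg)) ** 2            # power spectrum of the trace
    freqs = np.fft.rfftfreq(len(eeg), d=1.0 / fs)     # frequency (Hz) of each FFT bin
    # Look up the power in the bin closest to each candidate stimulus frequency.
    scores = [power[np.argmin(np.abs(freqs - f))] for f in candidates]
    return candidates[int(np.argmax(scores))]


# Synthetic example (assumed values): 4 s at 256 Hz, user attending the 11 Hz "left" light.
fs = 256
t = np.arange(4 * fs) / fs
rng = np.random.default_rng(0)
eeg = 0.5 * np.sin(2 * np.pi * 11 * t) + rng.normal(0.0, 1.0, t.size)
print(dominant_stimulus(eeg, fs, [11.0, 15.0]))  # 11.0
```

With a 4-second window the FFT bins are 0.25 Hz apart, so both 11 Hz and 15 Hz fall exactly on a bin. Real EEG is far noisier than this toy signal, which is why practical systems use multiple channels and stronger classifiers.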

This video shows a live demo of how SSVEP signals are affected by what our eyes are focusing on.

Experimental setup

Korea University designed an experimental environment for controlling a lower-limb exoskeleton using SSVEP. Users control the exoskeleton by focusing their attention on the desired stimulus.

The user can choose one of five actions to operate the exoskeleton. These correspond to five light-emitting diodes (LEDs), each flickering at a different frequency:

- walk forward (9 Hz)

- turn left (11 Hz)

- turn right (15 Hz)

- stand up (13 Hz)

- sit down (17 Hz)

![](/assets/img/posts/control-exoskeleton-with-your-brain-04.jpg)

If the intention is to move forward, the user focuses on the LED blinking at 9 Hz. Likewise, focusing on the LED blinking at 15 Hz makes the exoskeleton turn right.
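Once a frequency has been detected, turning it into an exoskeleton command is a simple lookup. The sketch below encodes the five frequency and action pairs listed above; the `command_for` helper and its 0.5 Hz `tolerance` are hypothetical choices for illustration, not part of the original system.

```python
# Hypothetical lookup table: stimulus frequency (Hz) -> exoskeleton command.
STIMULI_HZ = {
    9.0: "walk forward",
    11.0: "turn left",
    13.0: "stand up",
    15.0: "turn right",
    17.0: "sit down",
}


def command_for(detected_hz, tolerance=0.5):
    """Map a detected frequency to the nearest stimulus command, or None if nothing is close enough."""
    nearest = min(STIMULI_HZ, key=lambda f: abs(f - detected_hz))
    if abs(nearest - detected_hz) > tolerance:
        return None  # no stimulus within tolerance: issue no command
    return STIMULI_HZ[nearest]


print(command_for(9.0))   # walk forward
print(command_for(15.2))  # turn right
print(command_for(20.0))  # None
```

Returning `None` for out-of-range frequencies is a design choice: for a device that moves a person's body, doing nothing is usually safer than guessing.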

During the experiment, voice instructions guided the user. The task was to follow these instructions and operate the exoskeleton accordingly by focusing on the corresponding LED.

![](/assets/img/posts/control-exoskeleton-with-your-brain-05.jpg)

To build a supervised learning classifier, the collected EEG signals serve as the input data and the assigned tasks serve as the labels. For this experiment, the authors chose eight electrodes on the EEG cap, which correspond to the eight channels in the input data.

They then applied a fast Fourier transform (FFT) to convert the signal from the time domain to the frequency domain, which resulted in 120 frequency samples per channel. So, the input data is a 120x8 array.
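The shape arithmetic behind the 120x8 input can be sketched as follows. This is not the authors' preprocessing: the 256 Hz sampling rate, the 4-second window (which gives 0.25 Hz per FFT bin) and the choice to keep 120 bins starting at 5 Hz are all assumptions, picked so that the retained band (5 to 34.75 Hz) covers the five stimulus frequencies and their second harmonics. `to_cnn_input` is a hypothetical helper name.

```python
import numpy as np


def to_cnn_input(eeg, fs=256, f_start=5.0, n_bins=120):
    """Turn a (n_samples, n_channels) time-domain window into an (n_bins, n_channels) magnitude spectrum."""
    spectrum = np.abs(np.fft.rfft(eeg, axis=0))            # FFT along the time axis, per channel
    freqs = np.fft.rfftfreq(eeg.shape[0], d=1.0 / fs)       # bin frequencies in Hz
    start = int(np.searchsorted(freqs, f_start))            # first bin at or above f_start
    return spectrum[start:start + n_bins]                   # keep n_bins consecutive bins


# Stand-in for 4 s of 8-channel EEG sampled at the assumed 256 Hz.
window = np.random.default_rng(0).normal(size=(4 * 256, 8))
x = to_cnn_input(window)
print(x.shape)  # (120, 8)
```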

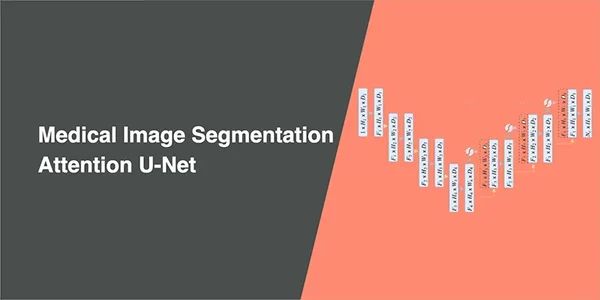

Convolutional neural network classifier

No-Sang Kwak et al. proposed a robust SSVEP classifier using a convolutional neural network, which they named CNN-1 in the paper. It has two hidden layers with kernel sizes of 1x8 and 11x1, respectively. These are followed by an output layer with 5 units, one for each of the five possible exoskeleton actions. The learning rate was 0.1, and the weights were initialised from a normal distribution.
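To see how the two kernel shapes act on a 120x8 input, here is a rough forward pass in plain NumPy. The 1x8 kernels mix the eight channels at each frequency bin (a spatial filter), and the 11x1 kernels then slide along the frequency axis. Only the kernel sizes, the 5-unit output and the normally distributed weights come from the description above; the number of filters (16 per layer), stride 1, the tanh activation, the softmax output and the 0.1 weight standard deviation are assumptions. Training with the 0.1 learning rate is omitted.

```python
import numpy as np

N_FREQ, N_CH, N_CLASSES = 120, 8, 5


def init_params(n1=16, n2=16, k=11, std=0.1, seed=0):
    """Draw all weights from a normal distribution (filter counts and std are assumptions)."""
    rng = np.random.default_rng(seed)
    out_len = N_FREQ - k + 1                                  # valid convolution, stride 1
    return {
        "W1": rng.normal(0.0, std, (N_CH, n1)),              # n1 kernels of size 1x8 (across channels)
        "b1": np.zeros(n1),
        "W2": rng.normal(0.0, std, (n2, k, n1)),             # n2 kernels of size 11x1 (along frequency)
        "b2": np.zeros(n2),
        "W3": rng.normal(0.0, std, (out_len * n2, N_CLASSES)),  # fully connected 5-unit output layer
        "b3": np.zeros(N_CLASSES),
    }


def forward(x, p):
    """Forward pass for one (120, 8) spectrum; returns 5 class probabilities."""
    h1 = np.tanh(x @ p["W1"] + p["b1"])                      # (120, n1): spatial filtering
    k = p["W2"].shape[1]
    out_len = h1.shape[0] - k + 1
    windows = np.stack([h1[i:i + k] for i in range(out_len)])  # (out_len, k, n1) sliding windows
    h2 = np.tanh(np.einsum("lkc,okc->lo", windows, p["W2"]) + p["b2"])  # (out_len, n2)
    logits = h2.reshape(-1) @ p["W3"] + p["b3"]               # (5,)
    e = np.exp(logits - logits.max())                         # numerically stable softmax
    return e / e.sum()


params = init_params()
x = np.random.default_rng(1).normal(size=(N_FREQ, N_CH))     # stand-in for one FFT input
probs = forward(x, params)
print(probs.shape)  # (5,)
```

In practice you would build this in a deep learning framework so that the weights can be trained with backpropagation; the NumPy version only illustrates the data flow and tensor shapes.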

![](/assets/img/posts/control-exoskeleton-with-your-brain-06.jpg)

The authors also implemented two other neural networks and three signal processing methods to compare the performance against CNN-1:

- CNN architecture #2 (CNN-2): similar to CNN-1, but with an additional fully connected layer of 3 units before the output layer

- Feedforward (NN): a simple 3-layer fully connected feedforward neural network

- Canonical correlation analysis (CCA): a popular approach that measures the correlation between the EEG signal and reference signals at each target frequency. CCA has long been the method of choice for SSVEP classification

- Multivariate synchronisation index (MSI): estimates the synchronisation between two signals and uses it as an index for decoding the stimulus frequency

- CCA + k-nearest neighbours (CCA-KNN): canonical correlation analysis with k-nearest neighbours
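Since CCA is the classic baseline, a minimal sketch of the usual formulation may help: for each stimulus frequency, build sine and cosine reference signals (here with 2 harmonics, an assumption), compute the largest canonical correlation between the multichannel EEG and those references, and pick the frequency with the highest correlation. This is a generic textbook version, not necessarily the authors' exact configuration, and the synthetic 8-channel 15 Hz signal is made up for illustration.

```python
import numpy as np

STIMULI_HZ = [9.0, 11.0, 13.0, 15.0, 17.0]


def reference_signals(freq, fs, n_samples, n_harmonics=2):
    """Sine/cosine references at the stimulus frequency and its harmonics: (n_samples, 2 * n_harmonics)."""
    t = np.arange(n_samples) / fs
    cols = []
    for h in range(1, n_harmonics + 1):
        cols += [np.sin(2 * np.pi * h * freq * t), np.cos(2 * np.pi * h * freq * t)]
    return np.column_stack(cols)


def max_canonical_correlation(X, Y):
    """Largest canonical correlation between the column spaces of X and Y."""
    # Centre both sets, orthonormalise them with QR; the singular values of
    # Qx^T Qy are the canonical correlations, sorted in descending order.
    qx, _ = np.linalg.qr(X - X.mean(axis=0))
    qy, _ = np.linalg.qr(Y - Y.mean(axis=0))
    return np.linalg.svd(qx.T @ qy, compute_uv=False)[0]


def cca_classify(eeg, fs, stimuli=STIMULI_HZ):
    """Return the stimulus frequency whose references correlate best with the (n_samples, n_channels) EEG."""
    scores = [max_canonical_correlation(eeg, reference_signals(f, fs, eeg.shape[0])) for f in stimuli]
    return stimuli[int(np.argmax(scores))]


# Synthetic example: 2 s of 8-channel EEG at 256 Hz, user attending the 15 Hz light.
fs = 256
t = np.arange(2 * fs) / fs
rng = np.random.default_rng(0)
phases = rng.uniform(0, 2 * np.pi, 8)
eeg = np.column_stack([np.sin(2 * np.pi * 15 * t + ph) for ph in phases]) + rng.normal(0.0, 1.0, (t.size, 8))
print(cca_classify(eeg, fs))  # 15.0
```

A nice property of CCA is that it needs no training data at all, which helps explain why it remains competitive when only a few labelled samples are available.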

I won’t go into the details of each of these methods, because CNN-1 has the best performance and is the focus of this article.

Evaluation

The authors performed 10-fold cross-validation with 13,500 training samples and 1,500 test samples in each fold. This table shows the classification accuracy for each classifier.
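The numbers follow from splitting 15,000 samples (13,500 + 1,500) into 10 equal folds: each fold is held out once as the test set while the other nine are used for training. Here is a minimal sketch of that split; the shuffling and the `k_fold_indices` helper are illustrative assumptions, not the authors' code.

```python
import numpy as np


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs; each of the k folds is the test set exactly once."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)   # shuffle, then cut into k folds
    for i in range(k):
        test = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, test


splits = list(k_fold_indices(15000))
print(len(splits), len(splits[0][0]), len(splits[0][1]))  # 10 13500 1500
```

The reported accuracy is then typically the average of the 10 per-fold test accuracies.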

![](/assets/img/posts/control-exoskeleton-with-your-brain-07.jpg)

This table shows that CNN-1 outperformed the other neural network architectures. CNN-1 also performed better than CCA, a popular method for SSVEP classification. Overall, the neural networks are more robust than CCA, which shows significantly lower performance.

Deep neural networks generally perform better with large amounts of data. To find out how much data is required to outperform traditional methods, the authors evaluated performance with various training sample sizes.

![](/assets/img/posts/control-exoskeleton-with-your-brain-08.jpg)

CNN-1 outperforms the other neural networks at every training set size. However, CCA-KNN shows better classification performance with fewer than 4,500 training samples.

To wrap up

The aim of this study was to build a robust BCI system with a deep learning classifier. The convolutional neural network showed promising and highly robust performance for SSVEP classification.

BCI systems have great potential to help people with disabilities control devices such as an exoskeleton (like an Iron Man suit) or a wheelchair (like Professor X’s).

But constructing a reliable BCI system is challenging, and significant effort is still needed to bring these devices from the laboratory to the mass market.

![Photo on Unsplash](/assets/img/posts/control-exoskeleton-with-your-brain-09.jpg)